Serverless

Serverless is an architecture pattern that allows the user to not worry about servers. Of course, servers still exist in a data center somewhere, but they are not the customer's concern. There are many benefits to using this pattern.

Abstractions

“All problems in computer science can be solved by another level of indirection... Except for the problem of too many layers of indirection.” — David Wheeler

Serverless architecture abstracts the hardware from the software. In the same way that an operating system can provide a framework and environment without the user having to know where to put things in memory, the cloud allows you to write software without having to worry about how it is actually running.

Abstractions are useful because they allow us to think at a higher level. We can think in the solution space, rather than in unnecessary details like config file management and patching regimens. Many people have wasted many hours tracking down a config setting in their load balancer, or rolling out patches gradually so as not to interrupt service.

These things, and many more like them, must still happen, but they are done by experts, or at least by people dedicated to doing that as their job. In Domain-Driven Design we would classify this as a generic subdomain: something that is required, but not core to the business goals. By abstracting the generic management stuff away, you are left with only the problems core to the business, which allows faster iteration on the solution that will generate income.

There are a lot of benefits to abstracting the server infrastructure out of your stack. If you are able to leverage the cloud completely to reach your business goal or solve your problem, you can significantly reduce cognitive overhead and maintenance, and increase development velocity.

Cognitive Overhead

When running a traditional infrastructure, you need a reasonably accurate mental model of it. Most production infrastructures are complex enough that it is nearly impossible to know every part of them in sufficient detail. Serverless doesn't make all of this go away, but it does reduce it. Here are some of the benefits, as I see them:

In a traditional infrastructure you need to predict and provision resources ahead of time so your service doesn't go down under load. Serverless infrastructure is immutable by default: since you don't provision servers or manage configuration files, there are fewer moving parts. A traditional infrastructure can be set up in an immutable fashion, but you must go out of your way to set that up and maintain it. Instead of thinking about how many servers you need, you think in terms of what resources and services help you solve your problem.

A large part of the cognitive overhead of running a traditional infrastructure is maintenance. You need to be able to keep your servers up, running, and patched.

Maintenance

Maintenance is a big part of any robust infrastructure, and with serverless you can minimize it. There are no servers to keep patched and updated, and no application configuration files to store in Puppet or Chef.

There is still maintenance you need to perform for serverless. You may need to optimize functions and queries, manage log tracing and analytics, and prune unused resources occasionally.

Even with serverless, the cloud provider can still make mistakes. Google and Amazon engineers are not infallible, and configuration errors on their side have resulted in downtime; examples include notable outages of Google Cloud Storage and AWS S3.

That said, most cloud services are designed for many nines of reliability. AWS S3, for instance, is designed for 99.99% availability and 99.999999999% (eleven nines) durability over a year. This means there should be no more than about 52 minutes of downtime per year, and if you store 10,000,000 objects you can expect to lose a single object once every 10,000 years on average. The experts at the cloud provider work hard to keep the backend up, so you don't have to, and your company doesn't need as many staff on hand to keep things running.
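The availability and durability figures above can be checked with a little arithmetic. This is a back-of-the-envelope sketch, not AWS's own model: it assumes a 365-day year and treats eleven nines of durability as each object independently having a 10^-11 chance of being lost per year.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; ignores leap years


def max_downtime_minutes(availability: float) -> float:
    """Yearly downtime allowed by an availability fraction such as 0.9999."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def years_between_losses(durability_nines: int, objects: int) -> float:
    """Expected years between single-object losses.

    Assumes each object is lost independently with annual probability
    10**-durability_nines (eleven nines -> 1e-11).
    """
    annual_loss_probability = 10.0 ** -durability_nines
    expected_losses_per_year = annual_loss_probability * objects
    return 1.0 / expected_losses_per_year


print(f"{max_downtime_minutes(0.9999):.1f} minutes of downtime per year")  # 52.6
print(f"{years_between_losses(11, 10_000_000):,.0f} years per lost object")  # 10,000
```

The same two functions work for other targets; for example, three nines (0.999) allows roughly 8.8 hours of downtime per year.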

Scale to Zero

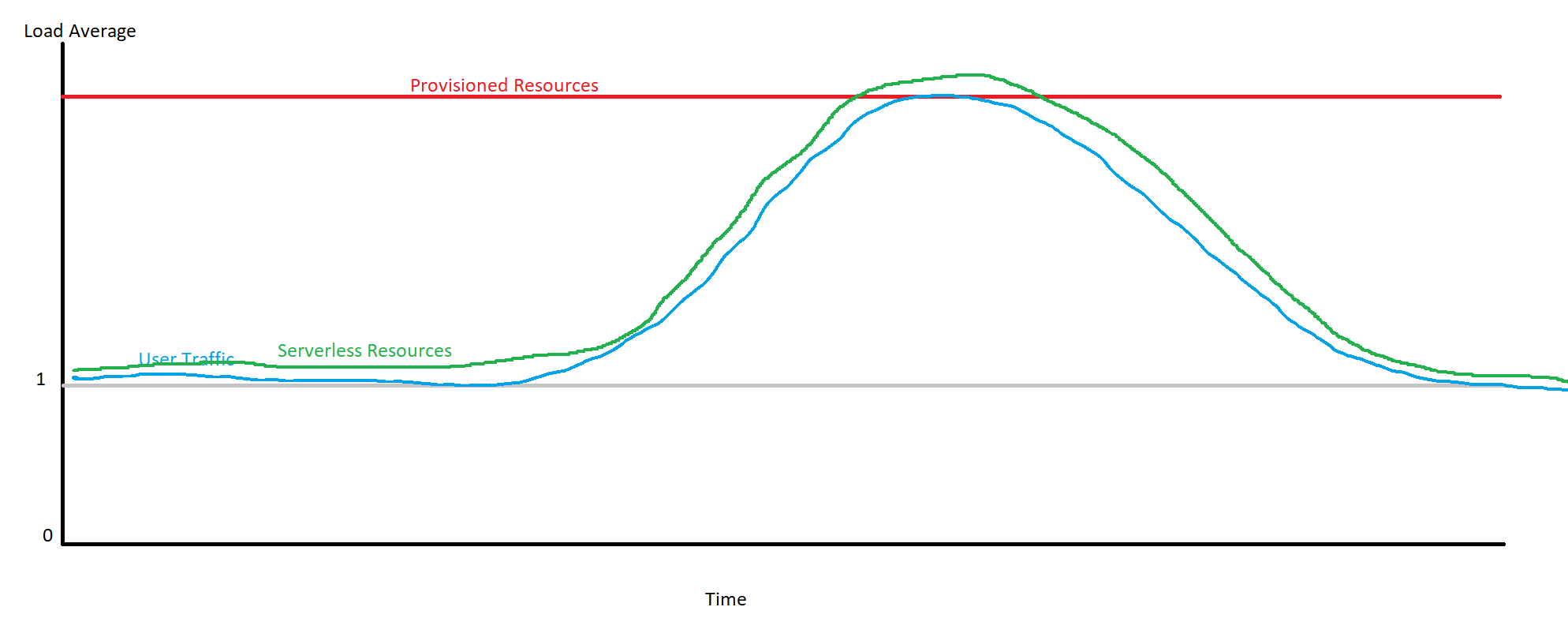

Scale to zero is the concept of having no provisioned resources when you have no traffic. The big benefit is cost: you save a lot of money and waste fewer resources. The downside is that there can be a lag, commonly called a cold start, between when a request comes in and when resources are available to handle it.
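To make that trade-off concrete, here is a toy model of scale to zero. It assumes a single instance, requests that never overlap, and a hypothetical `idle_timeout` after which the platform reclaims an idle instance; real providers manage this internally and do not necessarily expose such a setting. Any request that arrives when no instance is warm counts as a cold start.

```python
from typing import Iterable, Optional, Tuple


def simulate_scale_to_zero(
    arrivals: Iterable[float],
    idle_timeout: float = 300.0,  # hypothetical seconds an idle instance stays warm
    exec_seconds: float = 0.2,    # hypothetical time to handle one request
) -> Tuple[int, float]:
    """Return (cold_starts, warm_seconds) for request arrival times in seconds.

    warm_seconds is how long an instance existed, busy or idle. With no
    traffic it is zero, which is the whole point of scaling to zero.
    """
    cold_starts = 0
    warm_seconds = 0.0
    period_start = 0.0
    expires_at: Optional[float] = None  # None means currently scaled to zero

    for t in sorted(arrivals):
        if expires_at is None or t > expires_at:
            # No warm instance: close out the previous warm period, if any.
            if expires_at is not None:
                warm_seconds += expires_at - period_start
            cold_starts += 1
            period_start = t
        # Each request keeps the instance alive for another idle_timeout.
        expires_at = t + exec_seconds + idle_timeout

    if expires_at is not None:
        warm_seconds += expires_at - period_start
    return cold_starts, warm_seconds


requests = [0, 10, 1000]  # two requests close together, one much later
print(simulate_scale_to_zero(requests))                     # 2 cold starts
print(simulate_scale_to_zero(requests, idle_timeout=1000))  # 1 cold start, more warm time
```

A longer idle timeout means fewer cold starts but more warm, idle capacity; a shorter one saves capacity at the cost of more cold starts.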

In a traditional infrastructure you have to provision enough resources to handle the peak load. This means that, taking the 80-20 rule for the sake of argument, you are not using your resources to capacity 80% of the time. Yet you must pay to run these servers. Electricity must be used, coal burned, turbines spun, and the only output is unnecessary heat.
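A quick way to see the waste is to compare server-hours when sizing for the peak against server-hours when capacity follows demand. The load profile and per-server capacity below are made-up numbers chosen for illustration, not measurements.

```python
import math

# Hypothetical daily profile: requests/second for each hour of the day,
# 20 quiet hours and a 4-hour peak.
HOURLY_LOAD_RPS = [100] * 20 + [1000] * 4
SERVER_CAPACITY_RPS = 200  # hypothetical throughput of one server


def servers_needed(load_rps: float) -> int:
    """Smallest whole number of servers that can carry the given load."""
    return math.ceil(load_rps / SERVER_CAPACITY_RPS)


peak_servers = servers_needed(max(HOURLY_LOAD_RPS))
sized_for_peak = peak_servers * len(HOURLY_LOAD_RPS)  # server-hours per day
follows_demand = sum(servers_needed(load) for load in HOURLY_LOAD_RPS)
average_utilization = sum(HOURLY_LOAD_RPS) / (max(HOURLY_LOAD_RPS) * len(HOURLY_LOAD_RPS))

print(f"Sized for peak: {sized_for_peak} server-hours/day")    # 120
print(f"Following demand: {follows_demand} server-hours/day")  # 40
print(f"Average utilization when sized for peak: {average_utilization:.0%}")  # 25%
```

With these numbers, two thirds of the peak-sized fleet's server-hours are capacity that a demand-following setup would never pay for.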

In aggregate, at cloud scale, serverless resources can be provisioned dynamically and packed much more densely, using nearly 100% of the available capacity. Of course, in reality the data center must keep a lot of hardware on standby, ready to take up the load, but with serverless this standby capacity is minimized.

Here is a very detailed graph of this concept, as an example.

I don't think everyone should rush to implement serverless architectures to try to save the planet (there are better reasons to go serverless), but the cost optimization should be considered. Because you only pay for what you use, your costs track your usage patterns more directly. If you are just starting out and have few users, your costs will be low. Your costs scale with you and, ideally, can be factored into your pricing model.

Serverless scales to your credit card, and this allows you to focus on what really matters to the business.

Don't reinvent the wheel, focus on business value

There is a lot of technology involved in large enterprise infrastructure projects: networking, load balancing, firewalls, general logic, and other things unrelated to generating income for your business or to the problem you are solving.

With a serverless architecture, many of these parts of the infrastructure don't need to be provisioned or managed by you. They have been built for you, so there is no need to waste time reinventing the wheel. This immediately gives businesses that adopt serverless from the start a leg up: their engineers can focus on core value.

With a good software architecture and corresponding business architecture (Conway's Law), your development velocity should be much higher. There are fewer barriers between writing the software and delivering it to your customers.

Speaking of Conway's Law, a serverless pattern leans heavily into microservices by default, and implementing a well-architected solution requires a complementary business organization. This can bring many benefits, such as faster iterations with smaller teams and fewer bugs, since changesets are smaller.

Future Impact

There is no current standard for serverless computing. The International Data Center Authority mentions it in its AE360 paper (the compute layer), but this is not a robust standard. Because there is no standard, you have to choose between the three major cloud providers or roll your own. The OpenFaaS project can help with functions, and you could pair it with other SaaS products, but you would need to write a lot of integration code.

Vendor lock-in is a real concern: it limits your ability to migrate and introduces some unpredictability. If serverless architecture is going to be the future, I expect more standardization around common patterns will be necessary. Another huge benefit of standardization is consolidated tooling. Terraform providers and each cloud's own tools are great, but they have feature gaps, and the differences between them are an obstacle to deploying infrastructure as code (IaC). Better shared tooling is also one way cloud providers themselves could benefit from standardization.

Things I hope to see standardized:

- Compute

- API

- Storage

- Caching

- CDN

- Messaging/Eventing

- Monitoring

- Analytics
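As an illustration of what standardizing one of these, say storage, could buy you, here is a hypothetical provider-neutral interface. No such standard exists today; `ObjectStore`, `InMemoryStore`, and `archive_report` are made-up names, and a real system would need one adapter per cloud behind the same interface.

```python
from typing import Dict, Optional, Protocol


class ObjectStore(Protocol):
    """Hypothetical provider-neutral storage interface."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> Optional[bytes]: ...

    def delete(self, key: str) -> None: ...


class InMemoryStore:
    """Stand-in backend; a real adapter would wrap a specific cloud's SDK."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> Optional[bytes]:
        return self._objects.get(key)  # None if the key is missing

    def delete(self, key: str) -> None:
        self._objects.pop(key, None)  # deleting a missing key is a no-op


def archive_report(store: ObjectStore, report_id: str, body: str) -> str:
    """Business code depends only on the interface, not on a provider."""
    key = f"reports/{report_id}.txt"
    store.put(key, body.encode("utf-8"))
    return key


store = InMemoryStore()
key = archive_report(store, "q3", "Revenue is up.")
print(key, store.get(key))
```

Swapping providers would then mean swapping the object passed in as `store`, not rewriting `archive_report`.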

Beyond standardization, I expect more layers of abstraction to be built up. For instance, in AWS, why should the user have to choose between RDS Aurora, DynamoDB, and Redshift? If there were a standard for serverless storage, a user could describe their access patterns and requirements and have the proper infrastructure provided automatically.
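A toy version of that idea is a function that maps declared access patterns to a service. The rules below are a deliberately coarse heuristic assumed for illustration (relational queries to Aurora, high-volume key lookups to DynamoDB, large analytical scans to Redshift), not AWS guidance, and `AccessPattern` and `choose_storage` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessPattern:
    """Hypothetical declaration of how an application uses its data."""
    relational_queries: bool  # joins, ad-hoc SQL, multi-table transactions
    key_value_lookups: bool   # high-volume reads/writes by primary key
    analytical_scans: bool    # large aggregations over historical data


def choose_storage(pattern: AccessPattern) -> str:
    """Map an access pattern to an AWS service using coarse, assumed rules."""
    if pattern.analytical_scans:
        return "Redshift"
    if pattern.relational_queries:
        return "RDS Aurora"
    if pattern.key_value_lookups:
        return "DynamoDB"
    raise ValueError("no access pattern declared")


print(choose_storage(AccessPattern(relational_queries=False,
                                   key_value_lookups=True,
                                   analytical_scans=False)))  # DynamoDB
```

A real selector would have to weigh latency, consistency, data size, and cost as well, which is exactly the kind of decision a higher-level platform could take off the user's hands.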

Another form of abstraction would be to remove the notion of regions. Infrastructure could move around seamlessly to follow patterns of traffic, automatically provisioning compute and replicating data to storage closer to the user.

Regardless of standardization or further abstractions, serverless is set to have a huge impact on computing. The industry is still growing rapidly, and more and more companies are moving to the cloud and going serverless.

Arguments Against

There are also good reasons not to go serverless: vendor lock-in, cost, knowledge, Capex vs Opex, and the possibility that it simply doesn't provide the functionality your problem or business requires.

These are all valid concerns and need to be considered when planning your next architecture or migration.

Vendor Lock In

This is the fear of putting all your eggs in one basket. Now that all of your infrastructure is in AWS, what happens when AWS raises their prices or removes a product that you are depending on? How quickly can you migrate away from your 14 TB RDS instance?

More cloud providers alleviate this fear somewhat. Competition gives providers an incentive to keep prices low and predictable, because a competitor could easily undercut a price increase with a similar or equivalent product.

With IaC, it becomes easier to replicate infrastructure in a competing cloud environment while still running in the existing one. Migrating your data is a trickier issue, but with careful planning and services like AWS Snowball, it is definitely possible.

There are also hybrid solutions. Perhaps you balance your infrastructure across multiple cloud environments. This is tricky to set up and maintenance-heavy, but if vendor lock-in is a major concern, it is one alternative.

Cost

Cost can make or break a business that is just starting out, or one with tight margins. If you have a new business with unknown usage patterns, however, serverless can be a perfect fit: since it scales to zero, you can save a lot of money when your customers aren't using your platform.

If you are a business with tight margins, you probably have well-known and predictable access patterns. This is one case where serverless may not be the solution. If you can provision ahead of time the exact amount of resources required, then you can save a lot of money.
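One way to check whether you are in that situation is to compute the break-even volume between a fixed monthly bill for provisioned capacity and a pay-per-request bill. Both prices below are hypothetical placeholders, not any provider's actual rates, and the serverless bill is assumed to be purely proportional to request volume.

```python
FIXED_MONTHLY_COST = 500.0  # hypothetical: provisioned servers, $ per month
PRICE_PER_MILLION = 2.0     # hypothetical: all-in serverless cost, $ per million requests


def pay_per_use_cost(requests_per_month: int) -> float:
    """Serverless bill, assumed proportional to request volume."""
    return requests_per_month / 1_000_000 * PRICE_PER_MILLION


def break_even_requests() -> float:
    """Monthly request volume at which both options cost the same."""
    return FIXED_MONTHLY_COST / PRICE_PER_MILLION * 1_000_000


print(f"Break-even: {break_even_requests():,.0f} requests/month")  # 250,000,000
for volume in (10_000_000, 1_000_000_000):
    print(f"{volume:,} requests: serverless ${pay_per_use_cost(volume):,.2f} "
          f"vs provisioned ${FIXED_MONTHLY_COST:,.2f}")
```

Below the break-even volume, pay-per-use wins; well above it, with steady and predictable traffic, provisioning wins. The model ignores the capacity ceiling of the fixed option, which is the risk with provisioning exactly what you need.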

However, this allows no room for growth, at least not rapidly. You could find yourself in a position where you just can't handle the load the users are putting on your system. In that case, automatically scaling with cloud infrastructure could keep your business alive and making money.

A hybrid solution may be the perfect fit in that case. You provision resources for the base load and burst into the cloud when necessary to handle surges of traffic. This requires careful planning but is definitely possible, though it strays a bit from serverless.
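The split between base and burst capacity can be sketched by routing each hour's load to provisioned capacity up to a fixed ceiling and sending the overflow to serverless. The load profile and base capacity are hypothetical, and hourly granularity hides the second-by-second spikes a real system would see.

```python
from typing import List, Tuple

SECONDS_PER_HOUR = 3600


def split_load(hourly_rps: List[int], base_capacity_rps: int) -> Tuple[int, int]:
    """Return (requests served by provisioned base, requests burst to serverless)."""
    base_requests = 0
    burst_requests = 0
    for rps in hourly_rps:
        handled = min(rps, base_capacity_rps)  # base capacity takes what it can
        base_requests += handled * SECONDS_PER_HOUR
        burst_requests += (rps - handled) * SECONDS_PER_HOUR  # overflow bursts out
    return base_requests, burst_requests


# Hypothetical day: 20 quiet hours at 100 req/s and a 4-hour peak at 1,000 req/s.
profile = [100] * 20 + [1000] * 4
base, burst = split_load(profile, base_capacity_rps=400)
print(f"base: {base:,} requests, burst: {burst:,} requests")
# base: 12,960,000 requests, burst: 8,640,000 requests
```

Raising `base_capacity_rps` shifts traffic from the pay-per-use side to the provisioned side; the right ceiling depends on the relative prices of the two.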

Knowledge

Knowledge can easily be a barrier to entry to new technology. New things take time and persistence to learn. You may be hesitant to jump into serverless because there is just too much going on, and things are working just fine right now, thank you very much.

Fortunately, there are a lot of resources for learning this technology. From acloud.guru to linuxacademy, and all of the certification training courses, you have not only the teaching material, but also the accreditation to show that you learned it.

One of the huge benefits of the computer software industry is that anyone can learn it if they put in the time and effort. It is a great equalizer, but it does require the effort.

Capex vs Opex

Your company may be unwilling to migrate to serverless, or the cloud in general, because it is not a capital expense. The company does not own anything for equity purposes, they are merely renting hardware. I am not a financial advisor or an accountant, but this seems like a silly argument. Any money saved by clever accounting for tax purposes could be overshadowed by the amount of money saved by having a more efficient infrastructure. Your company makes its money from software, and that software needs to run on something.

You can pay people to build and maintain that infrastructure, or you can pay a third party to do it for you. There is a good chance that a staff of infrastructure, network, and software engineers to build and maintain it yourself will be more expensive.

Missing functionality

If you have a niche business or scientific experiment, you may not find the features you require in the cloud. There may be special requirements for privacy, security, or strange computations that just aren't there. In some cases this is absolutely true.

For instance, I don't see a quantum computer class of EC2 instances coming to AWS any time soon. In a lot of other cases, however, reframing the problem to use the provided tools is possible, and may even lead to new, more efficient solutions.

Perhaps you do not need to calculate everything at once in a large simulation, and an eventually consistent event-driven architecture would get the job done as well. This is more of a case-by-case thing, but it is worth considering alternative approaches because they could turn out to be more cost effective and efficient.
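As a sketch of that reframing, here is a Monte Carlo estimate of pi split into independent chunks, each handled as an event by a stateless function, with results aggregated as they arrive. The in-process `deque` stands in for a managed message queue and `handle_chunk` is a hypothetical handler; because each chunk is seeded and results are keyed by `chunk_id`, the answer does not depend on processing order, and a redelivered event does not get double-counted.

```python
import random
from collections import deque
from typing import Dict, List


def handle_chunk(event: Dict[str, int]) -> Dict[str, int]:
    """Stateless handler: what one function invocation might compute."""
    rng = random.Random(event["seed"])  # seeded, so a retry gives the same result
    hits = 0
    for _ in range(event["samples"]):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:  # point falls inside the quarter circle
            hits += 1
    return {"chunk_id": event["chunk_id"], "hits": hits, "samples": event["samples"]}


def run(events: List[Dict[str, int]]) -> float:
    queue = deque(events)  # stand-in for a managed message queue
    results: Dict[int, Dict[str, int]] = {}
    while queue:
        result = handle_chunk(queue.popleft())
        results[result["chunk_id"]] = result  # keyed by chunk, so duplicates overwrite
    hits = sum(r["hits"] for r in results.values())
    samples = sum(r["samples"] for r in results.values())
    return 4 * hits / samples  # quarter-circle area ratio, scaled to pi


events = [{"chunk_id": i, "seed": i, "samples": 10_000} for i in range(10)]
print(run(events))  # an estimate of pi
```

The final number is only available once every chunk has been processed, but no chunk has to wait on any other, which is what lets the work spread across many short-lived invocations.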

Conclusion

Serverless is a great form of computing, and it is here to stay. The industry is still growing rapidly, and for good reason. Companies can save a lot of money and time by using only the resources they need, and cutting out self-hosted infrastructure solutions.

Money can be saved not only in total run time costs, but also in manpower. Staffing requirements can be lessened, or people can be moved onto solving the business goals that actually generate income.

Serverless is a great abstraction over infrastructure in general. It provides the tools to think in the solution space, instead of about all the steps required to set it up.